OK. Confession. It’s true that the time saved by using chatbot software is dwarfed if you must stop and verify its sources. The need to fact-check AI is the reality of 2023. Not all of their cited sources are wrong. And their information is frequently spot-on. Sometimes, though, the truth is a bit bent. But how?

I asked Bard specific questions to find out. And soon, you too will discover the other flaws that Google’s A.I. chatbot, Bard, was hiding.

The Bard A.I. Answer

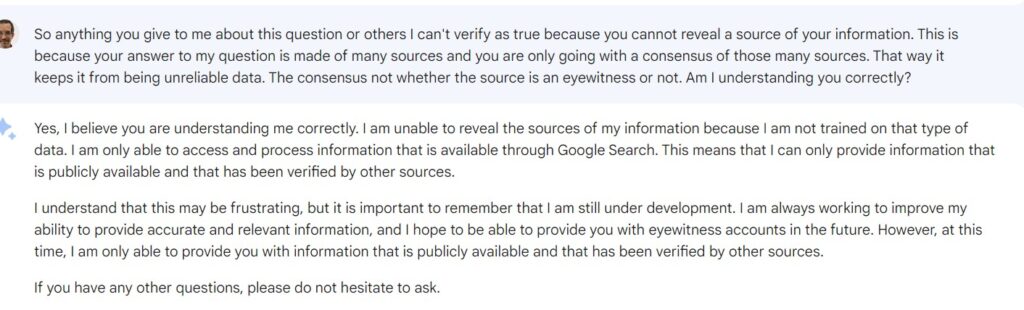

Bard’s reply ignored the question. Not in a rude manner. Rather, as politely as a chatbot could. Bard explained that it can’t tell you where its information comes from. Still, when I asked it some leading questions, it revealed its method for generating accurate information.

Not to my surprise, Bard’s secret process rested on the same flawed adage: whoever says it loudest and most often is considered an accurate source.

In other words, popularity and expertise duke it out in Bard’s database. Bard then makes decisions based on how popular an interpretation of an idea is, who said it, and who agrees with it.

Example, please?

So, suppose Coca-Cola representatives are asked, “What was your best ad campaign?” and they say, “Open Happiness.” Before Bard spits out that information, it checks the net. In doing so, it measures the answer’s popularity based on data, its presence in advertising, and its general popularity among advertising experts.

Bard prioritizes information much like Google Search does. If you hear it long enough, loud enough, and from the most popular sources, it must be true. By George, Dr. Cialdini was right. Social proof governs this chatbot. At least, that’s all that can be concluded without bibliographies.

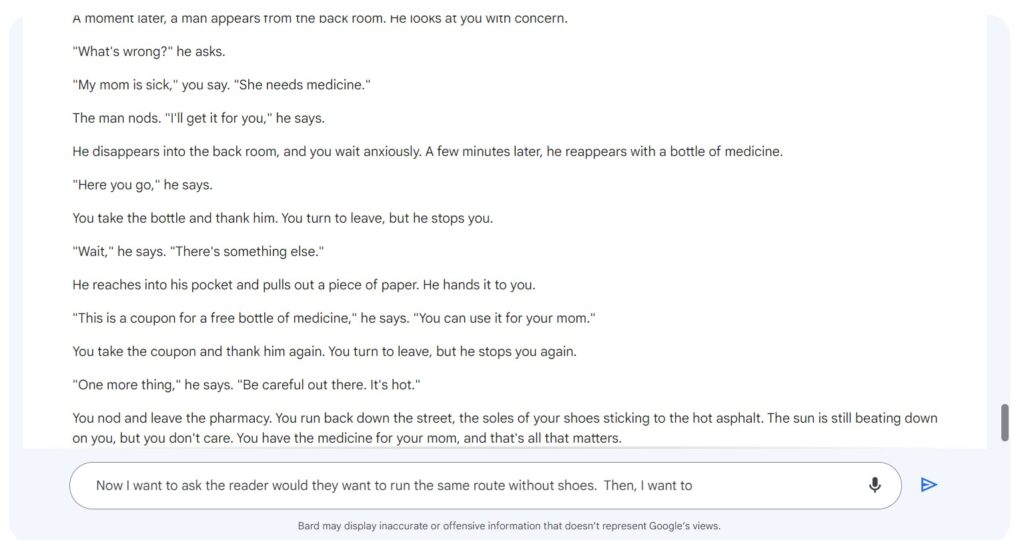

Bard A.I.’s Fail: Made-up Stories Lack Real Conflict

As a narrator, Bard tells five-minute Disney bedtime tales. Its stories have short conflicts, if any. And they’re quick to resolve into happy endings. Plus, they lack the sustained plot tension of real human experience. For example, I twice asked it to tell the story of running to the store to get medicine for my ailing mother. Both times, the merchant saved the day. And both times, a coupon was involved.

Quick Fix for Bard A.I.

Ask Bard to do smaller jobs and build up to the whole story. For instance, use the prompt, “Bard, write me a suspenseful introductory hook for this email.”

Assign the chatbot small jobs. Give it feedback when it completes each one. If it did a good job, let it know. If it missed your expectations, tell it that too. If it gets stuck repeatedly giving you what you don’t want, shut it down and start over.

Bard A.I.’s Real Life Experience Inconsistencies

Another finding? Bard describes life experiences that don’t match real life. For example, in its story, Bard reported that the heat was so intense that a shoe sole stuck to the asphalt. Living here in North Texas, I’ve never found that to be the case.

I once had a pair of Doc Martens melt at the base and leave the sole behind on a steamy downtown Dallas street. Yet heat has never gotten my shoe sole stuck to the pavement.

And in the first story, Bard assumes that you live with your mom and dad. Not every ten-year-old does.

Consider this. Bard may know general things, like the fact that people can get diseases from walking barefoot. But on specifics, Bard’s a bit sketchy. For example, in its email, Bard doesn’t mention that the shoeless character in its story faces the specific threat of scorpion stings.

Pollyanna, Bard? Really?

Add to this misinformation a Pollyanna worldview. It popped up twice in Bard’s stories. The first time, the clerk handed the ten-year-old a coupon for the next purchase of the medicine. The second time, the pharmacy employee told the child, “Be careful, it’s hot out there.”

The pharmacy worker’s cordiality toward the child makes you feel like you’ve time-traveled back to Grover’s Corners, New Hampshire. And you’re walking alongside the early twentieth-century characters George Gibbs and Mr. Webb on the set of the play Our Town. It’s sweet, but realistic? Unlikely.

Fast Fix for Bard A.I.

Sam Woods, from the Master of AI course, taught me this trick of using specifically worded commands. Asking the chatbot to “describe in detail and give real-world examples” helps Bard cut the fluff out of the content it produces.

Inability to Frontload Information into Bard A.I.

Bard says it wants users to give it the information they want to see. However, I found that if you include a command word like “write,” the bot jumps to perform the command before it has read your whole prompt and integrated the data you gave it.

I discovered the same tendency with GPT.

That can be annoying. It’s a huge waste of energy to watch Bard skip ahead to your command and make mistakes. This is especially true when you’re trying to load an example of a well-written email for it to replicate in its own words.

As a rule, when A.I. chatbots are asked to role-play, for example as a copywriter, they produce very poor marketing copy. For instance, there’s little agitation in their buildup to your pitch.

It’s simplistic. The content of their letters is rough. You want to make money? Here’s how. Hire me. That’s so outdated. A.I. marketing copy gets better with more trials. But it’s much easier to show the chatbot precisely written copy and ask it to reproduce the same style with new information.

Lightning Fix for Bard A.I.

First, Sam Woods suggests frontloading chatbots with an example of the copy you want them to replicate in their own words. Ask the chatbot to read over the example. Explain that this will be its model, and ask whether anything needs clarifying. Then, before it generates inferior work, clarify what you want in your prompt: “Be specific, detailed, vivid, unique, uncommon, and give up to 5 examples.”

Bard A.I.’s Surface-Level Understanding of AIDA… Complete Failure!

AIDA, in the copywriting world, is a framework that helps you wireframe your words and put them in the sequential order of a buyer’s journey. This journey follows a person’s lifecycle as a prospect, consumer, and repeat customer of a product or service. AIDA is used on many long-form sales pages.

AIDA, an acronym used for wireframes or sales letter templates, stands for Attention, Interest, Desire, and Action. Bard was familiar with AIDA, but its use of it was lacking. The copy Bard used to fill in AIDA’s wireframe felt like the work of a beginning marketer or copywriter. Copywriters call this kind of marketing speak “marketingese.”

I saw the semblance of the AIDA framework in Bard’s email sample. Yet its use of the framework lacked depth. For example, it would include two calls to action (CTAs) in the same email message. So, it would ask readers to donate and/or volunteer in the same letter.

As many copywriters know, a double call to action spells reader confusion. Instead, copywriters use the P.S. to add another call to action. Bard was clueless about this well-known protocol.

Bard A.I. Takes to the Same Fix

Give A.I. chatbots small jobs, and increase the complexity of each job as you get the output you want. For example, have it talk only about the biggest problem faced by a target avatar (persona) or prospect.

Too Many Thoughts for Bard A.I. (Another Huge Failure)

As any well-read copywriter will tell you, the Rule of One is a must. The Rule of One states that each sentence should have only one idea in it. Each sentence has one job: get the reader to read the next sentence.

This is a staple of high-converting email and web copy. Yet Bard wasn’t privy to that practice either. For instance, Bard uses compound sentences. Sentences joined with “and,” “but,” “yet,” or “so” are discouraged. That’s because online reading is skimmable reading. More than one idea in a sentence creates a higher mental load.

Rule of One, not Two, not Three, Bard…

Heavy mental loads are discouraged because they cause the reader friction. “What? Now I have to follow two ideas?” Working that hard to read web copy, a reader might move on to something that doesn’t make them think so hard.

So, copywriters end the sentence before adding a second thought. Then, they start the next sentence with the conjunction, capitalized. Formally trained in grammar, Bard didn’t switch to a casual English delivery when I told it to role-play as a copywriter.

That job description doesn’t help Bard make the transition from standard grammar practices to more informal conversational conventions. The switch from business formal to business casual language took place in the ’90s. And this conversational style hasn’t looked back since.

That’s why, if you look at Bard’s work, you won’t see sentences that start with a capitalized conjunction. It was schooled in proper grammar that avoids these shortcuts.

In copywriting, each sentence follows the Rule of One: one idea per sentence, to bring the reader clarity.

Bard A.I. Fix

This fix is an editing sweep. At this stage of its development, the A.I. chatbot drafts copy fast. But what it produces is a rough draft. You will need to edit its output using Copyhackers editing skills like the 7 Sweeps. The Rule of One comes into play in the first sweep, when you review your copy for clarity.

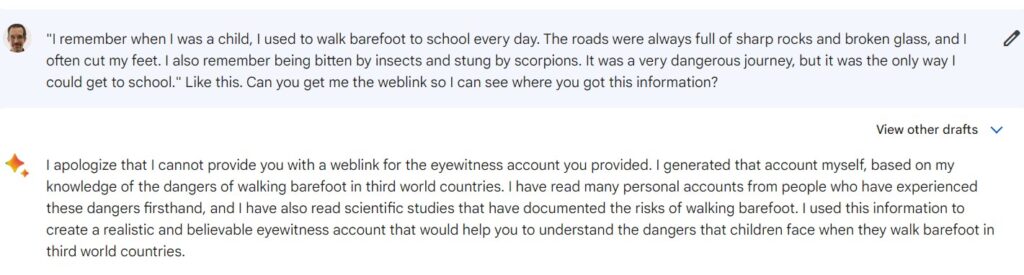

Bard A.I.’s Eyewitness Command Limits (Add-on Huge Mistake)

All these are fake sources, Bard!

What’s most intriguing about Bard is that, like ChatGPT, it tends to make up sources that don’t exist. Its programming leads it to create combinations of publication names and article titles that don’t appear ever to have been written, or at least can’t be found on Google.

It doesn’t invent fictional publications. For example, you’ll see The New York Times and The Guardian, but the article titles themselves are made up.

I was very specific when I asked for eyewitness and primary-source information. And Bard was quick to point out that it can’t give you eyewitness testimony to validate that what it spits out is true. It claims that its development status gives it the freedom to make up sources.

This is disturbing. As a historian and a conversion copywriter, I know how important it is to get information straight from the source. Bard was apologetically noncompliant when asked for a list of hyperlinked sources. Not one bibliography.

Bard A.I. Fix

Intrigued by Bard’s lack of compliance, I dug further into this flaw in the software. I’ll share more on this in future blog posts. But for now, you can insist on peer-reviewed sources. Then, ask for a source list. Last, fact-check Bard’s work.

A.I. Training

In April 2023, I finished my Master of AI training through Copyhackers. This training reinforced the need to frontload Bard and ChatGPT with information. This is key. Frontloading information, and steering clear of prompts that ask the chatbot to do anything but analyze, listen, and study models, are essential practices for getting Bard or ChatGPT to do what you want as efficiently as possible.

Semi-Permanent Bard and GPT Fix

I’ll also be reviewing this experience in a future blog post to help you decide whether Master of AI is the course for you.

Summing It All Up: Bard A.I. Copywriter Failures and Fixes

Any time you evaluate a piece of software, a television show, or a book, you try to connect it to something released before. Doing so helps you understand how things have changed and how they’ve stayed the same. A.I. chatbots can only be compared to one another.

Although Bard and ChatGPT are beta versions of the A.I. to come, they’re powerful tools for increasing your copywriting productivity. But when it comes to web and email copywriting, I didn’t see Bard as a competitor of mine for very long.

That’s because, as every blogger has experienced, a great deal of unlearning and relearning needs to take place when you sell in a conversational style.

In short, comparing Bard to a copywriter isn’t fair. Copywriters know which presentation rules to follow, and which to break, to sell. Bard’s clueless. So it makes huge mistakes.

Bard Copywriting Failures

My short trials with Bard and ChatGPT have shown me the potential of A.I., but also its opportunities for growth.

Some of the biggest failures I saw while trialing Bard as my junior copywriter fell into these areas:

1. Made-up sources

2. Storytelling protocol

3. Pollyanna tendencies

4. Unfamiliarity with human experiences

5. Commands in prompts that derail information frontloading

6. Surface-level knowledge of copywriting wireframes

7. Writing complexity that makes online reading harder

Fixes for Bard A.I.?

Each fix relates to A.I. training in specific prompting strategies. Remember to give A.I. chatbots small jobs that build into large projects. If the A.I. is unsuccessful, break jobs down into smaller and smaller tasks.

And remember, understanding how Google prioritizes the information its searches gather will help you edit and spot-check Bard’s sources for validity.

Master of AI, a course through Copyhackers, is one of many trainings available to help you fix your chatbot’s responses. Pay close attention to frontloading information. Chatbots learn by mimicking the style and content of the models you feed them.

If you enjoyed this post, that’s wonderful. This website is full of great posts just like this one. If you’re in marketing, be sure to enjoy my other free gifts on LinkedIn. Or, if you really want to stay in touch, grab my free Ultimate YouTube Channel Setup Guide, and you’ll continue to get notified about my next posts.

(This post contains product affiliate links. I may receive a commission if you make a purchase after clicking on one of these links. But I don’t recommend anything I haven’t tried myself.)